Manta

EU AI Act: A Practical Guide for Companies

Published 12.01.2026

© Wiktor Dabkowski / Zuma Press / ContactoPhot

A New Global Standard?

The European Union Artificial Intelligence Act (EU AI Act) represents a landmark development in the regulation of artificial intelligence, establishing the world’s first comprehensive and binding legal framework specifically designed for AI systems. Its overarching objective is to ensure that artificial intelligence placed on or used within the EU market is safe, transparent, and respectful of fundamental rights, while at the same time fostering innovation and technological competitiveness. By adopting a harmonized, risk-based approach, the EU seeks to create legal certainty for businesses and public authorities while strengthening trust in AI technologies among users and society at large.

Unlike previous regulatory initiatives that primarily relied on ethical guidelines and voluntary codes of conduct, the EU AI Act introduces enforceable obligations and significant sanctions for non-compliance. As such, it marks a transition from soft-law principles to a formal governance regime with direct implications for corporate strategy, product development, procurement practices, and internal compliance structures. For companies operating in or targeting the European market, the Act is not merely a legal instrument but a framework that will shape how AI systems are designed, deployed, and managed throughout their entire lifecycle.

The practical consequences of this framework extend far beyond companies that develop AI technologies themselves. Organizations that use or integrate AI systems into their operations, such as employers, financial institutions, healthcare providers, or public authorities, also acquire legal responsibilities as deployers of AI. Furthermore, research institutions and innovation-driven organizations must navigate a regulatory environment that balances experimental freedom with safeguards against societal harm.

The Core Structure

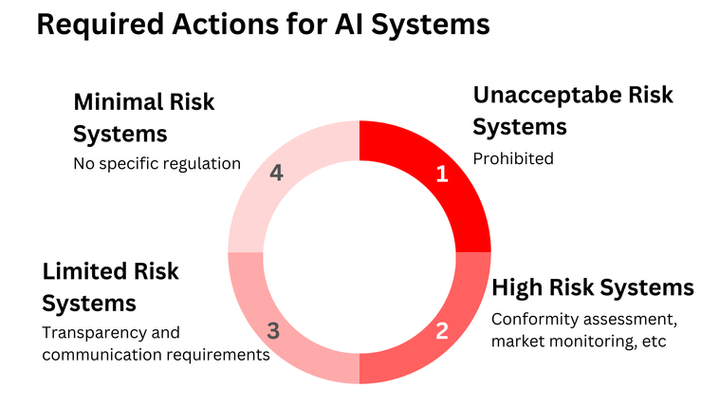

The EU AI Act is based on a risk-oriented regulatory model that classifies AI systems according to the level of risk they pose to individuals and society. At the highest level, the Act prohibits certain AI practices that are considered to create an unacceptable risk, such as systems that manipulate human behavior or enable intrusive forms of biometric surveillance. These prohibitions place clear legal limits on which AI use cases can be developed or deployed within the EU market.

The most significant obligations apply to high-risk AI systems, particularly those used in sensitive areas such as recruitment, credit assessment, healthcare, biometric identification, and education. Providers of such systems must comply with detailed requirements, including risk management processes, data governance standards, technical documentation, human oversight, and appropriate levels of accuracy, robustness, and cybersecurity. A conformity assessment and CE marking are required before market placement, positioning AI compliance alongside traditional product safety regulation.

For limited-risk systems, the Act focuses mainly on transparency. Companies must inform users when they are interacting with AI or when content has been artificially generated. While less burdensome, these obligations still affect system design and customer communication strategies. Most AI systems fall into the minimal-risk category and remain largely unregulated, with the EU encouraging voluntary codes of conduct.

Meaning for AI Companies

For companies that develop and market AI systems, the EU AI Act establishes a regulatory framework that significantly changes how AI products are created and managed. AI providers bear primary responsibility for compliance, much like manufacturers in traditional regulated sectors, and must ensure that their systems meet legal requirements before they are placed on the EU market.

A key obligation is the classification of AI systems according to the Act’s risk categories. Where a system is considered high-risk, companies must implement a lifecycle-based risk management process, addressing potential impacts on health, safety, and fundamental rights. Compliance must therefore be integrated into product design and development, rather than treated as a final legal check.

Data governance requirements place strong emphasis on the quality and appropriateness of training, validation, and testing data. AI companies must ensure that datasets are suitable for their intended purpose and that risks of bias and error are actively addressed. This elevates data management to a central legal and strategic function.

High-risk AI systems are also subject to extensive documentation and transparency obligations. Providers must be able to explain how their systems function, how risks are mitigated, and how regulatory standards are met. A conformity assessment and CE marking are required before such systems are placed on the market, introducing a formal approval stage that affects development timelines and market entry strategies.

The Act further requires AI systems to be designed with effective human oversight and to meet standards of accuracy, robustness, and cybersecurity. After deployment, providers must monitor system performance and report serious incidents, making compliance an ongoing responsibility.

Although these obligations increase costs and complexity, they also create opportunities. Regulatory compliance can strengthen market trust and act as a competitive differentiator. The EU AI Act thus transforms AI companies into regulated providers of high-responsibility technologies, where legal conformity becomes a core element of product quality and corporate governance.

How Are Non-AI Companies Affected?

The EU AI Act affects not only companies that develop AI systems but also those that use them in their operations. Non-AI companies that deploy AI become legally responsible for how these systems are applied in practice, especially in high-risk use cases such as recruitment, credit assessments, healthcare decisions, or customer identification.

Deployers must ensure that AI systems are used in accordance with the provider’s instructions and within their intended purpose. They are required to implement appropriate human oversight, monitor system performance, and take action if risks or errors arise. This creates a need for internal procedures that define responsibility, escalation paths, and decision-making authority when AI systems are involved.

Procurement processes are also directly affected. Companies must conduct due diligence when acquiring AI tools and assess whether suppliers comply with the EU AI Act. In practice, this leads to stronger contractual requirements, including compliance warranties, documentation access, and liability allocation between providers and deployers.

In addition, non-AI companies must ensure that employees who interact with AI systems are properly trained. Staff must understand the system’s limitations, how to interpret its outputs, and when human intervention is required. Transparency obligations, such as informing individuals when AI is used or when content is AI-generated, further influence customer communication and internal policies.

Moving Forward

A first practical step for all organizations is to develop a clear overview of their AI landscape. Companies should identify which AI systems they develop, use, or integrate into their processes and classify them according to the risk categories defined by the Act. This inventory forms the foundation for all further compliance efforts and enables a realistic assessment of regulatory exposure.
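As a rough illustration of what such an inventory could look like in practice, the following Python sketch records each system with its role and risk tier and then flags the entries that need attention first. The class names, field names, and sample entries are hypothetical and not prescribed by the Act. The tier of each system is assumed to be assigned by hand after legal review rather than computed.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # The four tiers described in this article.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


class Role(Enum):
    PROVIDER = "provider"  # develops and markets the system
    DEPLOYER = "deployer"  # uses the system in its own operations


@dataclass(frozen=True)
class AISystem:
    name: str
    purpose: str
    role: Role
    tier: RiskTier  # assumption: set manually after legal review


def group_by_tier(systems):
    """Return a dict mapping each RiskTier in use to the system names in it."""
    grouped = defaultdict(list)
    for system in systems:
        grouped[system.tier].append(system.name)
    return dict(grouped)


def needs_priority_review(system):
    """Flag prohibited and high-risk entries, which carry the heaviest obligations."""
    return system.tier in (RiskTier.UNACCEPTABLE, RiskTier.HIGH)


# Hypothetical inventory entries, for illustration only.
inventory = [
    AISystem("cv-screener", "ranking job applicants", Role.DEPLOYER, RiskTier.HIGH),
    AISystem("support-chatbot", "answering customer questions", Role.DEPLOYER, RiskTier.LIMITED),
    AISystem("spam-filter", "filtering inbound email", Role.DEPLOYER, RiskTier.MINIMAL),
]

by_tier = group_by_tier(inventory)
priority = [s.name for s in inventory if needs_priority_review(s)]

for tier, names in by_tier.items():
    print(f"{tier.value}: {', '.join(names)}")
print("Review first:", ", ".join(priority))
```

Even a simple register like this makes regulatory exposure visible at a glance. In a real organization it would more likely live in a governance tool or spreadsheet, with additional fields such as vendor, owner, and review date.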

Second, businesses should establish internal AI governance structures. This includes assigning clear responsibilities, creating interdisciplinary teams combining legal, technical, data protection, and compliance expertise, and integrating AI oversight into existing governance frameworks. AI compliance should not be isolated but aligned with GDPR, cybersecurity, product safety, and risk management processes.

Third, procurement and vendor management practices must be adapted. Companies should require transparency from AI providers, request documentation, and include compliance-related clauses in contracts, such as warranties, audit rights, and liability provisions. This is particularly important for non-AI companies that rely on third-party systems but remain responsible for their lawful use.

Fourth, organizations should invest in documentation, monitoring, and training. Clear internal policies on AI use, continuous system monitoring, and incident response procedures are essential. Employees interacting with AI systems must be trained to understand their limitations, recognize risks, and apply effective human oversight.

From a strategic perspective, companies should view compliance not only as a regulatory burden but as a value-creating factor. Demonstrating conformity with the EU AI Act can strengthen customer trust, improve market positioning, and differentiate products and services in an increasingly regulated digital economy. Early integration of compliance into product design and business processes is likely to be more cost-effective than reactive adaptation at a later stage.
